IoT Security Fundamentals—Part 3: Ensuring Secure Boot and Firmware Update

Contributed By DigiKey's North American Editors

2020-06-18

Editor’s Note: Despite the proliferation of IoT devices, securing these devices remains an ongoing concern, to the degree that security challenges can be a barrier to adoption of connected devices in Industrial IoT (IIoT) and mission-critical applications where corporate and personal data may be compromised in the event of a successful attack. Securing IoT applications can be daunting, but in reality, IoT device security can be built upon a few relatively straightforward principles that are supported by hardware security devices. By following well-established security practices, developers can address these concerns. This multi-part series provides practical guidance to help developers ensure best practices are followed from the outset. Part 1 discusses the cryptographic algorithms underlying secure designs. Part 2 discusses the role of private keys, key management, and secure storage in secure IoT designs. Here, Part 3 examines the mechanisms built into secure processors to mitigate other types of threats to IoT devices. Part 4 identifies and shows how to apply security mechanisms in advanced processors to help ensure the isolation needed to mitigate attacks on the runtime environment of IoT devices. Part 5 describes how IoT security continues from IoT devices through higher level security measures used to connect those devices to IoT cloud resources.

Used in combination, hardware-based cryptography and secure storage provide essential capabilities required to implement secure Internet of Things (IoT) designs. Once deployed, however, IoT devices face multiple threats that aim to subvert them, whether to launch immediate attacks or to establish more subtle, advanced persistent threats.

This article describes how developers can enhance security in IoT devices, using a root of trust that builds on underlying security mechanisms to provide a trusted environment for software execution on secure processors from Maxim Integrated, Microchip Technology, NXP Semiconductors, and Silicon Labs, among others.

What is a root of trust and why is it needed?

Cryptographic methods and secure keys are critical enablers for security in any connected device. As noted in Part 1 and Part 2 of this series, they provide fundamental mechanisms used by higher level protocols to protect data and communications. Protecting the system itself requires developers to account for vulnerabilities that can affect system operation and software execution in embedded systems.

In a typical embedded system, a system reset due to a power failure or critical software exception eventually engages the software boot process to reload a firmware image from non-volatile memory. Normally, rebooting is an important safety mechanism used to restore the function of a system that has become accidentally or intentionally destabilized. In connected systems, where hackers use a variety of black hat tools to compromise software, security specialists often recommend rebooting to counter intrusions affecting software execution. For example, in 2018 the FBI recommended that consumers and business owners reboot their routers to thwart a massive hacking campaign then underway.

In practice, reboot is no guarantee of system integrity. If the firmware image itself has been compromised, the system remains under the hacker's control after reboot. To mitigate these kinds of threats, developers need to ensure that their software runs on a chain of trust that builds on a root of trust established at boot time and extends through all layers of the software execution environment. The ability to achieve this level of security depends critically on ensuring that the boot process starts with trusted firmware.

Verifying firmware images for secure boot

In an embedded system, the host processor loads a firmware image from flash into main memory and begins executing it, or executes it directly from flash if the processor supports execute-in-place (XIP). If hackers have compromised the firmware image, the boot process results in a compromised system.

To verify firmware integrity before booting with it, developers use a code signing process that begins early in the supply chain. Within a secure facility, the system's firmware image is signed with the private key of a private-public key pair generated for a cryptographically robust signature algorithm such as the Elliptic Curve Digital Signature Algorithm (ECDSA). While the private key never leaves the facility, the corresponding public key ships with the system. During boot, the processor uses this public key to verify the firmware signature before using the image.
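
To make the sign-then-verify flow concrete, here is a minimal pure-Python sketch of ECDSA over the NIST P-256 curve with SHA-256 (Python 3.8+ for `pow(x, -1, m)`). The function names and the sample image bytes are illustrative assumptions, not any vendor's API. The arithmetic is not constant-time and skips checks a production verifier needs (for example, that the public key lies on the curve), so treat it as a teaching aid rather than boot code.

```python
import hashlib
import secrets

# NIST P-256 (secp256r1) domain parameters: y^2 = x^3 + A*x + B over GF(P)
P = 0xFFFFFFFF00000001000000000000000000000000FFFFFFFFFFFFFFFFFFFFFFFF
A = P - 3
B = 0x5AC635D8AA3A93E7B3EBBD55769886BC651D06B0CC53B0F63BCE3C3E27D2604B
N = 0xFFFFFFFF00000000FFFFFFFFFFFFFFFFBCE6FAADA7179E84F3B9CAC2FC632551  # group order
G = (0x6B17D1F2E12C4247F8BCE6E563A440F277037D812DEB33A0F4A13945D898C296,
     0x4FE342E2FE1A7F9B8EE7EB4A7C0F9E162BCE33576B315ECECBB6406837BF51F5)


def point_add(p1, p2):
    """Add two affine points; None represents the point at infinity."""
    if p1 is None:
        return p2
    if p2 is None:
        return p1
    (x1, y1), (x2, y2) = p1, p2
    if x1 == x2 and (y1 + y2) % P == 0:
        return None  # Q + (-Q) is the point at infinity
    if p1 == p2:
        lam = (3 * x1 * x1 + A) * pow(2 * y1 % P, -1, P) % P  # tangent slope
    else:
        lam = (y2 - y1) * pow((x2 - x1) % P, -1, P) % P  # chord slope
    x3 = (lam * lam - x1 - x2) % P
    return x3, (lam * (x1 - x3) - y1) % P


def scalar_mult(k, point):
    """Double-and-add; NOT constant time, so illustration only."""
    result = None
    while k:
        if k & 1:
            result = point_add(result, point)
        point = point_add(point, point)
        k >>= 1
    return result


def digest(image: bytes) -> int:
    # SHA-256 yields 256 bits, matching the bit length of N, so no truncation is needed
    return int.from_bytes(hashlib.sha256(image).digest(), "big")


def generate_keypair():
    d = secrets.randbelow(N - 1) + 1  # private key: stays in the secure facility
    return d, scalar_mult(d, G)       # public key: ships with the device


def sign(d, image: bytes):
    e = digest(image)
    while True:
        k = secrets.randbelow(N - 1) + 1  # fresh random nonce for every signature
        r = scalar_mult(k, G)[0] % N
        s = pow(k, -1, N) * (e + r * d) % N
        if r != 0 and s != 0:
            return r, s


def verify(q, image: bytes, signature) -> bool:
    r, s = signature
    if not (1 <= r < N and 1 <= s < N):
        return False
    w = pow(s, -1, N)
    x = point_add(scalar_mult(digest(image) * w % N, G), scalar_mult(r * w % N, q))
    return x is not None and x[0] % N == r


# Secure facility: sign the image. Device: verify it before booting.
private_key, public_key = generate_keypair()
firmware = b"example firmware image v1.2"
signature = sign(private_key, firmware)
print(verify(public_key, firmware, signature))            # True
print(verify(public_key, firmware + b"\x00", signature))  # False: tampered image
```

Only `public_key` needs to exist on the device; anyone holding it can check a signature, but producing one requires `private_key`.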

Of course, the process just described leaves the public key itself vulnerable and, by extension, leaves the system firmware vulnerable to unauthorized replacement. If the public key remains unprotected in the embedded system, hackers could potentially replace it with the public key from a private-public key pair they generated themselves. If they then replace the system's firmware image with malicious firmware signed with their own private key, the malicious image passes signature verification and the boot process proceeds, resulting in a compromised system.

For this reason, secure systems rely on a valid public key that is provisioned in a secure element within the secure facility. Security ICs such as Maxim Integrated's DS28C36 and Microchip Technology's ATECC608A provide both the secure storage of a traditional secure element and secure execution of authentication algorithms, such as ECDSA, for firmware signature verification.
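
One common variant of this protection, sketched here in Python, stores a SHA-256 digest of the vendor's public key in write-once storage during provisioning and rejects any candidate key whose digest does not match before the key is used for signature verification. The `KeySlot` class is a hypothetical stand-in for a secure element or one-time-programmable fuses; the DS28C36 and ATECC608A instead hold the key itself and run the verification internally.

```python
import hashlib
import hmac


class KeySlot:
    """Hypothetical write-once key slot (stand-in for a secure element or OTP fuses)."""

    def __init__(self):
        self._pinned_digest = None

    def provision(self, public_key: bytes) -> None:
        # Done once, inside the secure facility; afterwards the slot is locked
        if self._pinned_digest is not None:
            raise PermissionError("key slot is already locked")
        self._pinned_digest = hashlib.sha256(public_key).digest()

    def is_trusted(self, public_key: bytes) -> bool:
        # Called at boot, before the key is used to check the firmware signature
        if self._pinned_digest is None:
            return False  # an unprovisioned device trusts no key
        candidate = hashlib.sha256(public_key).digest()
        return hmac.compare_digest(candidate, self._pinned_digest)  # constant-time compare


# Placeholder byte strings stand in for encoded P-256 public keys
vendor_key = bytes.fromhex("04" + "11" * 64)
attacker_key = bytes.fromhex("04" + "22" * 64)

slot = KeySlot()
slot.provision(vendor_key)
print(slot.is_trusted(vendor_key))    # True
print(slot.is_trusted(attacker_key))  # False: substituted key is rejected
```

Because the attacker cannot rewrite the locked slot, swapping in their own public key no longer lets a maliciously signed image pass.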

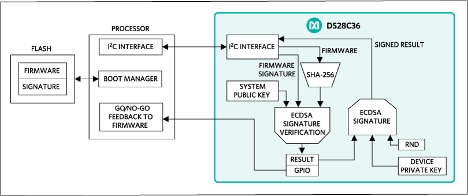

For example, prior to boot, the host processor can send the firmware to the DS28C36 over a serial interface. In turn, the DS28C36 uses the system public key provisioned earlier in the secure facility to verify that the firmware signature was indeed created with the associated private key in that facility. Finally, the DS28C36 signals the verification result to the host processor, which proceeds to load the firmware image only if the signature is valid (Figure 1).

Figure 1: Developers can use security ICs such as the Maxim Integrated DS28C36 to verify firmware signatures to prevent the host processor from booting compromised firmware. (Image source: Maxim Integrated)
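
The host-side sequence in Figure 1 can be sketched in Python as follows. `MockSecurityIC` and its `begin`/`write_chunk`/`finish` methods are hypothetical and do not reflect the DS28C36 command set. To keep the example dependency-free, the mock checks an HMAC-SHA256 tag in place of the ECDSA signature the real device verifies; unlike ECDSA, HMAC requires the verifier to hold a secret key, so this substitution illustrates only the message flow, not the security model.

```python
import hashlib
import hmac


class MockSecurityIC:
    """Hypothetical external security IC; HMAC-SHA256 stands in for ECDSA."""

    def __init__(self, provisioned_key: bytes):
        self._key = provisioned_key  # provisioned in the secure facility, never read by the host
        self._mac = None

    def begin(self) -> None:
        self._mac = hmac.new(self._key, digestmod=hashlib.sha256)

    def write_chunk(self, chunk: bytes) -> None:
        # Models one transfer over the serial interface
        self._mac.update(chunk)

    def finish(self, signature: bytes) -> bool:
        ok = hmac.compare_digest(self._mac.digest(), signature)
        self._mac = None
        return ok  # the pass/fail result is all the host receives


def host_secure_boot(ic, image: bytes, signature: bytes, chunk_size: int = 64) -> str:
    """Stream the image to the IC, then boot only if it reports a valid signature."""
    ic.begin()
    for offset in range(0, len(image), chunk_size):
        ic.write_chunk(image[offset:offset + chunk_size])
    return "boot" if ic.finish(signature) else "halt"


key = b"provisioned-in-secure-facility"  # placeholder secret for the HMAC stand-in
image = bytes(range(256)) * 4
tag = hmac.new(key, image, hashlib.sha256).digest()  # produced in the secure facility

ic = MockSecurityIC(key)
print(host_secure_boot(ic, image, tag))                 # boot
print(host_secure_boot(ic, image[:-1] + b"\x00", tag))  # halt: tampered image
```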

A more secure boot process protects the firmware image itself, further reducing concern about compromised keys or images. Built on integrated secure storage and cryptographic accelerators, effective secure boot capabilities are available in a growing number of processors, including Silicon Laboratories' Gecko Series 2 processors, NXP's LPC55S69JBD100, Maxim Integrated's MAX32520, and Microchip Technology's ATSAML11D16A, among others. Using these capabilities, this class of secure processors can provide the root of trust required to create a trusted environment for execution of system and application software.

Providing a root of trust through secure boot

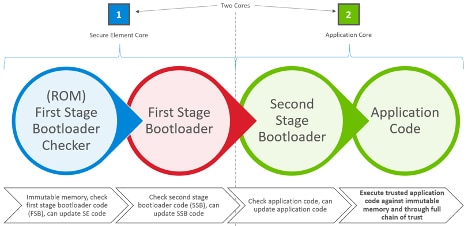

Secure processors in this class provide secure boot options designed to ensure the integrity of the firmware image underlying the root of trust. For example, Silicon Laboratories' EFR32MG21A and EFR32BG22 Gecko Series 2 processors build this root of trust through a multistage boot process based on a hardware secure element and a virtual secure element (VSE), respectively (Figure 2).

Figure 2: Silicon Laboratories' Gecko Series 2 EFR32MG21A processor uses an integrated hardware secure element in the first stage of its multistage boot process (shown here), while the EFR32BG22 initiates its multistage boot process with a virtual secure element. (Image source: Silicon Laboratories)

In the EFR32MG21A, a dedicated processor core provides cryptographic functionality along with a hardware secure element for secure key storage. Supported by this dedicated capability, the processor initiates the boot process using code stored in read-only memory (ROM) to verify the first-stage bootloader (FSB) code. Once verified, the FSB code runs and in turn verifies the code signature of the second-stage bootloader (SSB). The boot sequence continues with execution of the verified SSB, which verifies the signature of the application code; this code typically includes both system-level code and higher-level application code. Finally, the verified application code runs, and system operations proceed as required by the application.

Because this process starts with ROM code and runs only verified FSB, SSB, and application code, it produces a verified chain of trust for code execution. The first link in this chain relies on ROM code that cannot be modified, and each subsequent link extends that trusted environment. At the same time, this approach allows developers to safely update application code, and even the first- and second-stage bootloader code: as long as each code package carries a valid signature, the trusted environment remains intact.
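
The verify-before-execute chain, and the reason signed updates remain safe, can be modeled in a few lines of Python. The stage names follow Figure 2, but `Stage`, `boot_chain`, and the HMAC-SHA256 tag standing in for each stage's ECDSA signature are assumptions made to keep the sketch self-contained; a real device verifies asymmetric signatures, so it never needs to hold the signing secret.

```python
import hashlib
import hmac
from dataclasses import dataclass

# Stand-in for the vendor signing key (real devices hold only a public key)
SIGNING_KEY = b"stand-in-for-vendor-signing-key"


def sign(code: bytes) -> bytes:
    return hmac.new(SIGNING_KEY, code, hashlib.sha256).digest()


def signature_ok(code: bytes, signature: bytes) -> bool:
    return hmac.compare_digest(sign(code), signature)


@dataclass
class Stage:
    name: str
    code: bytes
    signature: bytes


def boot_chain(stages):
    """Immutable ROM code verifies the first stage; each verified stage verifies the next."""
    executed = ["ROM"]
    for stage in stages:
        if not signature_ok(stage.code, stage.signature):
            return executed, f"halt: {stage.name} failed verification"
        executed.append(stage.name)  # control passes to a stage only after it verifies
    return executed, "running application"


chain = [Stage(name, code, sign(code)) for name, code in [
    ("FSB", b"first-stage bootloader"),
    ("SSB", b"second-stage bootloader"),
    ("APP", b"application v2"),
]]
print(boot_chain(chain))  # (['ROM', 'FSB', 'SSB', 'APP'], 'running application')

chain[2] = Stage("APP", b"application v3", sign(b"application v3"))  # signed update
print(boot_chain(chain))  # (['ROM', 'FSB', 'SSB', 'APP'], 'running application')

chain[1] = Stage("SSB", b"tampered bootloader", chain[1].signature)  # unsigned change
print(boot_chain(chain))  # (['ROM', 'FSB'], 'halt: SSB failed verification')
```

The update to `APP` boots normally because it carries a valid signature, while the modified `SSB` stops the chain before it can run.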

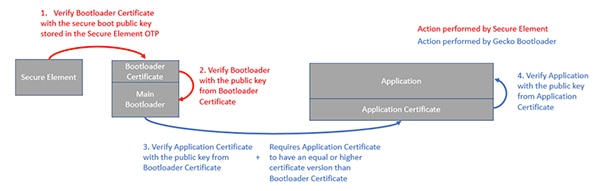

Processors that provide this kind of secure boot with a root of trust typically support multiple modes and options. For example, Silicon Laboratories' Gecko Series 2 processors provide a stronger certificate-based secure boot capability.

Used in routine public key infrastructure (PKI) transactions, certificates contain a public key along with a reference to one or more associated certificates that ultimately lead to a root certificate issued by a certificate authority (CA). Each certificate in this chain serves to verify the certificate(s) below it, resulting in a chain of trust anchored in a trustworthy CA. Browsers rely on this chain of trust during the authentication phase of the Transport Layer Security (TLS) protocol to confirm the identity of web servers. In the same way, embedded systems can use certificates to confirm the identity of the source of bootloader or application code. Here, the multistage boot process proceeds as described earlier, but with additional verification of the certificate associated with each stage (Figure 3).

Figure 3: Silicon Laboratories' Gecko Series 2 processors enhance system security by verifying certificates for public keys used during signature verification at each stage of the boot process. (Image source: Silicon Laboratories)
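
A certificate-chain check of this kind can be sketched in Python. Real certificates are X.509 structures carrying ECDSA or RSA signatures; to stay within the standard library, this sketch swaps in Lamport one-time signatures, a genuine public-key signature scheme built only from SHA-256 in which each key must sign just one message. The `Certificate` layout, the names `RootCA` and `VendorCA`, and `verify_chain` are hypothetical.

```python
import hashlib
import secrets
from dataclasses import dataclass


def H(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def keygen():
    """Lamport one-time key pair: 256 pairs of random secrets and their hashes."""
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    pk = [(H(a), H(b)) for a, b in sk]
    return sk, pk


def message_bits(msg: bytes):
    d = int.from_bytes(H(msg), "big")
    return [(d >> (255 - i)) & 1 for i in range(256)]


def sign(sk, msg: bytes):
    # Reveal one secret from each pair, selected by the corresponding digest bit
    return [pair[bit] for pair, bit in zip(sk, message_bits(msg))]


def verify(pk, msg: bytes, sig) -> bool:
    return len(sig) == 256 and all(
        H(s) == pair[bit] for s, pair, bit in zip(sig, pk, message_bits(msg)))


@dataclass
class Certificate:
    subject: str
    issuer: str
    public_key: list  # subject's public key
    signature: list   # issuer's signature over the fields below

    def tbs(self) -> bytes:  # "to be signed" bytes
        key_bytes = b"".join(a + b for a, b in self.public_key)
        return f"{self.subject}|{self.issuer}|".encode() + key_bytes


def issue(issuer: str, issuer_sk, subject: str, subject_pk) -> Certificate:
    cert = Certificate(subject, issuer, subject_pk, [])
    cert.signature = sign(issuer_sk, cert.tbs())
    return cert


def verify_chain(chain, root_name: str, root_pk) -> bool:
    """chain[0] is the code-signing certificate; the last entry is signed by the root."""
    for i, cert in enumerate(chain):
        if i + 1 < len(chain):
            issuer_name, issuer_pk = chain[i + 1].subject, chain[i + 1].public_key
        else:
            issuer_name, issuer_pk = root_name, root_pk  # root key provisioned in the device
        if cert.issuer != issuer_name or not verify(issuer_pk, cert.tbs(), cert.signature):
            return False
    return True


root_sk, root_pk = keygen()      # CA root key; root_pk is provisioned in the device
vendor_sk, vendor_pk = keygen()  # intermediate key
stage_sk, stage_pk = keygen()    # code-signing key for one boot stage

chain = [issue("VendorCA", vendor_sk, "SSB-signer", stage_pk),
         issue("RootCA", root_sk, "VendorCA", vendor_pk)]
image = b"second-stage bootloader"
image_sig = sign(stage_sk, image)

# Boot check: validate the certificate chain, then the image signature with the leaf key
print(verify_chain(chain, "RootCA", root_pk) and verify(chain[0].public_key, image, image_sig))  # True
```

An image signed with a key whose certificate does not chain back to the provisioned root fails the first check, even if its own signature is internally consistent.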

Other processors, such as the NXP LPC55S69JBD100, support a number of different options for the firmware image. Besides signed firmware images, these processors support boot images using the Device Identifier Composition Engine (DICE) industry standard from the Trusted Computing Group. A third option allows developers to store images in special regions of processor flash that support the PRINCE cipher, a low-latency block cipher that can achieve security strength comparable to other ciphers in a much smaller silicon area. In the LPC55S69JBD100, the PRINCE engine decrypts encrypted code or data on the fly as it is read from the processor's dedicated PRINCE regions of flash. Because the secret keys used for decryption are accessible only to the PRINCE cryptography engine, this decryption process remains secure. In fact, those secret keys are protected by a key encryption key (KEK) generated by the LPC55S69JBD100's physical unclonable function (PUF) feature. (For more on PUF and KEK usage, see Part 2.)
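
The core DICE idea is that a hardware engine combines a unique device secret (UDS) with a cryptographic measurement of the first mutable code to produce a compound device identifier (CDI), so any change to that code yields a different CDI and, with it, different derived keys. A minimal Python sketch, assuming HMAC-SHA256 as the one-way function and using a placeholder UDS (neither detail is taken from LPC55S69JBD100 documentation), looks like this:

```python
import hashlib
import hmac


def measure(code: bytes) -> bytes:
    """Measurement of a code image: its SHA-256 digest."""
    return hashlib.sha256(code).digest()


def derive_cdi(secret: bytes, code: bytes) -> bytes:
    """Assumed one-way derivation: CDI = HMAC-SHA256(secret, measure(code))."""
    return hmac.new(secret, measure(code), hashlib.sha256).digest()


# Placeholder: a real UDS is unique per device and readable only by the DICE engine
UDS = bytes(32)

first_mutable_code = b"first mutable code v1"
cdi = derive_cdi(UDS, first_mutable_code)
cdi_tampered = derive_cdi(UDS, first_mutable_code + b" + implant")
print(cdi == cdi_tampered)  # False: modified code receives a different identity

# Layering: each stage derives the next CDI from its own CDI and the next image
next_cdi = derive_cdi(cdi, b"application image")
print(len(next_cdi))  # 32
```

Because the UDS is locked away before the first mutable code runs, modified firmware cannot compute the CDI, or any key derived from it, that the genuine firmware would have received.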

This approach provides developers with the ability to store additional firmware images, a capability required to provide IoT devices with firmware over-the-air (FOTA) update methods without the risk of "bricking" the device. If the processor can use only one location to store firmware images, a faulty firmware image can leave the processor in an indeterminate or locked state that renders the device inoperable, or "bricks" it. By storing firmware images in the LPC55S69JBD100's PRINCE-enabled flash regions, developers can use fallback strategies that restore a previous working version of firmware if the new version boots into a nonfunctional state.

Because all of these new firmware images must pass the signature verification checks required in the underlying boot process, developers can take full advantage of secure FOTA to add new features or fix bugs without compromising the system or its chain of trust.
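
A fallback scheme of this kind can be modeled as a small state machine. The two-slot layout, the single trial boot, and the names `BootState`, `stage_update`, `select_boot_slot`, and `confirm` are hypothetical and not an NXP API; the `signature_ok` flag stands in for the boot-time signature verification described above.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class BootState:
    slots: Dict[str, Optional[str]]  # slot name -> firmware version stored there
    active: str                      # last known-good slot
    trial: Optional[str] = None      # newly installed slot awaiting confirmation
    attempts_left: int = 0

    def stage_update(self, version: str, signature_ok: bool, max_attempts: int = 1) -> bool:
        """Write an update into the inactive slot, but only if its signature verified."""
        if not signature_ok:
            return False
        inactive = "B" if self.active == "A" else "A"
        self.slots[inactive] = version
        self.trial, self.attempts_left = inactive, max_attempts
        return True

    def select_boot_slot(self) -> str:
        """Run by the bootloader after every reset."""
        if self.trial is not None:
            if self.attempts_left > 0:
                self.attempts_left -= 1
                return self.trial
            self.trial = None  # trial image never confirmed itself: roll back
        return self.active

    def confirm(self) -> None:
        """Called by newly booted firmware once its own health checks pass."""
        if self.trial is not None:
            self.active, self.trial = self.trial, None


state = BootState(slots={"A": "1.0", "B": None}, active="A")
state.stage_update("1.1", signature_ok=True)
print(state.select_boot_slot())  # B: trial boot of 1.1
# Suppose 1.1 hangs before calling confirm() and the watchdog resets the device
print(state.select_boot_slot())  # A: rolled back to 1.0

state.stage_update("1.2", signature_ok=True)
print(state.select_boot_slot())  # B: trial boot of 1.2
state.confirm()                  # 1.2 passes its health checks
print(state.select_boot_slot())  # B: 1.2 is now the known-good image
print(state.stage_update("1.3", signature_ok=False))  # False: never staged
```

Keeping the previous image untouched until the new one confirms itself is what prevents a faulty update from bricking the device.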

Conclusion

System and application-level security requires an execution environment that only allows authorized software to operate. Although code signature verification is an essential feature in enabling this type of environment, secure systems need to draw on a more comprehensive set of capabilities to build the chain of trust required to ensure trusted software execution. The foundation for these trusted environments lies in a root of trust provided through secure boot mechanisms supported by secure processors. Using this class of processors, developers can implement secure IoT devices able to withstand attacks meant to cripple software execution in a system or hijack the system entirely.

Disclaimer: The opinions, beliefs, and viewpoints expressed by the various authors and/or forum participants on this website do not necessarily reflect the opinions, beliefs, and viewpoints of DigiKey or official policies of DigiKey.